Background

The system is PeopleSoft HRMS 8.8 running on SQL Server 2008 R2 SP2, used as a reporting environment. Data is copied from production each night, and a small, select group of users run queries against it.

System performance was both terrible and unpredictable. There was a “rule” in place that any query running for more than two hours was cancelled. To me, even two minutes seemed a long time for the data volumes involved.

Analysis

There were lots of things wrong with the instance setup: default values for MAXDOP and “cost threshold for parallelism”, only one TempDB data file, and no cap on the memory SQL Server could use. Essentially, it was an out-of-the-box SQL Server install. Not even a Cumulative Update had been applied. On the other hand, SQL Server had 128 GB of RAM and 16 processors allocated to it, so it was hardly short of resources.
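As a rough sketch, the instance settings mentioned above can be checked with the standard catalog views. The queries only read configuration. The choice of which options to list is mine rather than part of the original investigation.

```sql
-- Current values of the instance settings discussed above.
-- Out-of-the-box values are 0 (MAXDOP), 5 (cost threshold) and
-- 2147483647 (max server memory, i.e. effectively uncapped).
SELECT name, value, value_in_use
FROM sys.configurations
WHERE name IN (N'max degree of parallelism',
               N'cost threshold for parallelism',
               N'max server memory (MB)')
ORDER BY name;

-- Number of TempDB data files (type_desc = 'ROWS' excludes the log file).
SELECT COUNT(*) AS tempdb_data_files
FROM sys.master_files
WHERE database_id = DB_ID(N'tempdb')
  AND type_desc = N'ROWS';
```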

But none of that, in itself, really explained the very poor performance of many of the user queries in PeopleSoft, especially given that running the exact same query in SSMS gave completely different (and quite fast) results. The lack of indexing on the underlying tables and the unnecessary Cartesian products in the PeopleSoft query security views made the queries less than quick, but they still typically completed in a few seconds or minutes in SSMS. In PS Query, whether run online or scheduled, they often never finished, even after 5 or 6 hours.

Now this database is a very old HRMS 8.8 system that has been upgraded from SQL Server 2000, and through various old PeopleTools releases, up to the giddy heights of 8.49.28 🙂

After much investigation, running SQL Server Profiler along with Adam Machanic’s superb sp_WhoIsActive (read more about it from Brent Ozar here), it turned out to be all related to the setting of ARITHABORT – a well-known but, I think, poorly understood issue.

There are numerous articles on the internet about the issues of SQL running faster in SSMS than via other clients. I leave you to search for those yourself, but to summarise:

You must make sure the connection settings you use in SSMS match those used by the application. Otherwise the query plan you see in SSMS may not bear any similarity to the one the application is using. This makes problem solving nearly impossible.
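One way to see which settings each connection is actually using, without a trace, is the sys.dm_exec_sessions DMV. This is a minimal sketch. What the application shows up as in program_name depends on your environment, so no filter on it is assumed here.

```sql
-- SET options in effect for every user session (1 = ON, 0 = OFF).
-- Compare the rows for your SSMS window with those for the application.
SELECT session_id,
       program_name,
       host_name,
       login_name,
       arithabort,
       quoted_identifier,
       ansi_nulls,
       ansi_padding,
       ansi_warnings,
       concat_null_yields_null
FROM sys.dm_exec_sessions
WHERE is_user_process = 1
ORDER BY program_name, session_id;
```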

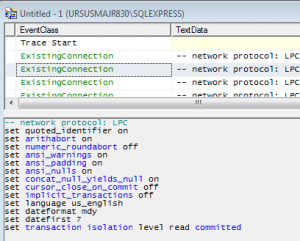

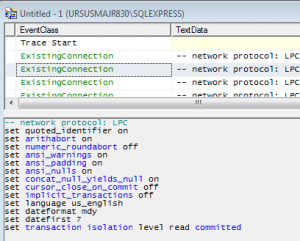

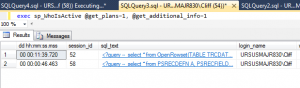

Profiler will help you identify the options set at the connection level:

And using:

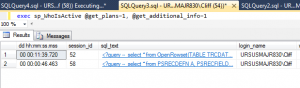

exec sp_WhoIsActive @get_plans=1

will give you the session and its query plan, which you can open and compare with the plan you get for the same statement in SSMS. If they are different, you need to investigate why.
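If the difference turns out to be a SET option, you can make an SSMS session mimic the application before re-running the statement. The sketch below assumes the application connects with ARITHABORT OFF, as it did in this case. The commented placeholder stands in for the statement captured from the application.

```sql
-- Match the application's connection setting for this SSMS session only.
SET ARITHABORT OFF;
GO

-- Paste the statement captured from the application here, unchanged,
-- and run it with "Include Actual Execution Plan" enabled.

-- Restore the SSMS default afterwards.
SET ARITHABORT ON;
GO
```

With the settings matched, the plan you see in SSMS should be much closer to the one the application gets, which is what makes the comparison meaningful.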

ARITHABORT

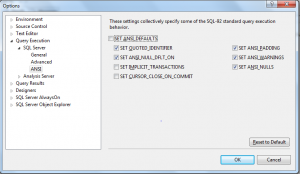

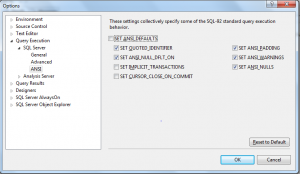

ARITHABORT affects the query plan and is part of the query plan “cache key”, so if its value differs between SSMS and the client application you will not get the same query plan in both. SQL Server will hold two plans for the same SQL statement. Remember as well that SSMS issues SET commands as defined in Tools -> Options -> Query Execution -> SQL Server -> Advanced:
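The two plans can be seen side by side in the plan cache. The following is a sketch using the documented plan cache DMVs. The LIKE filter is a placeholder you would replace with a distinctive fragment of your own statement. In the set_options attribute, ARITHABORT is bit 4096.

```sql
-- Cached plans for a given statement, with the ARITHABORT state each
-- plan was compiled under. Two rows for the same text with different
-- set_options values means two separate plans.
SELECT st.text,
       cp.usecounts,
       CONVERT(int, pa.value) AS set_options,
       CASE WHEN CONVERT(int, pa.value) & 4096 = 4096
            THEN 'ON' ELSE 'OFF' END AS arithabort,
       cp.plan_handle
FROM sys.dm_exec_cached_plans AS cp
CROSS APPLY sys.dm_exec_plan_attributes(cp.plan_handle) AS pa
CROSS APPLY sys.dm_exec_sql_text(cp.plan_handle) AS st
WHERE pa.attribute = N'set_options'
  AND st.text LIKE N'%<fragment of your statement>%'  -- placeholder
ORDER BY st.text, set_options;
```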

ARITHABORT in PeopleSoft

Now the interesting thing about ARITHABORT is that the standard PeopleSoft install scripts create the PeopleSoft database and then issue two ALTER DATABASE commands:

ALTER DATABASE <DBNAME>

SET ARITHABORT ON

go

ALTER DATABASE <DBNAME>

SET QUOTED_IDENTIFIER ON

go

where <DBNAME> is your database name.
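To check whether those two database-level defaults are actually in place, sys.databases exposes both as flags. A minimal sketch, using the same <DBNAME> placeholder as above:

```sql
-- 1 = ON, 0 = OFF for each database-level default.
SELECT name,
       is_arithabort_on,
       is_quoted_identifier_on
FROM sys.databases
WHERE name = N'<DBNAME>';
```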

The install guide also tells you to ensure that QUOTED_IDENTIFIER ON is specified for any client connections to a PeopleSoft database. It goes on to explain that this can be set in the ODBC connection setup. What it fails to mention is setting ARITHABORT for client connections. This should also be set, but you cannot set it on the ODBC connection. It is best to set it at the instance level, as the default for all connections:

EXEC sys.sp_configure N'user options', N'64'

GO

RECONFIGURE WITH OVERRIDE

GO

Now, assuming you have done that and you have the default SSMS settings in place, the query execution plan you see for the PeopleSoft application via ODBC should match the one you get in SSMS. More importantly, in a great many cases the plan that gets chosen will be a much better one than with ARITHABORT OFF.
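A quick way to confirm the change took effect is sketched below. Note that the user options default only applies to connections opened after the change, so run the second query from a fresh connection. Also bear in mind that SSMS issues its own SET commands, so a check from SSMS reflects its options rather than the instance default alone.

```sql
-- The instance-wide default bitmask (64 = ARITHABORT).
SELECT name, value_in_use
FROM sys.configurations
WHERE name = N'user options';

-- What the current session ended up with. In @@OPTIONS,
-- bit 64 is ARITHABORT and bit 256 is QUOTED_IDENTIFIER.
SELECT CASE WHEN @@OPTIONS & 64  = 64  THEN 'ON' ELSE 'OFF' END AS arithabort,
       CASE WHEN @@OPTIONS & 256 = 256 THEN 'ON' ELSE 'OFF' END AS quoted_identifier;
```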

Now in this specific case it seems that someone created the original database without using the supplied script, and so neither of these defaults was set at the database level. For QUOTED_IDENTIFIER they did at least stick with the ODBC connection defaults, so that was always ON. But sadly ARITHABORT was not.

The Fix

A quick ALTER DATABASE, a reconfigure of SQL Server, a shutdown of the PeopleSoft application servers and a restart of the SQL Server instance, and the problem was solved. It didn’t make every query faster, but it did improve the vast majority. The ones it did not fix are typically the ones with missing joins 🙂 Now all I need to do is create some indexes that reflect the way the data is accessed and rewrite the query security views to be more efficient, and I should be able to get all the queries under two minutes. Well … those with joins between the tables …

NOTE: You could, and perhaps **should**, include QUOTED_IDENTIFIER in that user options setting for extra safety (use 320 instead of 64 in the sp_configure call above). Just in case someone un-ticks that option whilst setting up ODBC.
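For reference, 320 is simply the two option bits added together: 64 (ARITHABORT) plus 256 (QUOTED_IDENTIFIER). A sketch of the combined setting follows. It assumes no other user options bits are in use on the instance, because sp_configure replaces the whole bitmask rather than adding to it.

```sql
-- 64 (ARITHABORT) + 256 (QUOTED_IDENTIFIER) = 320.
-- This overwrites any user options value already configured.
EXEC sys.sp_configure N'user options', 320
GO
RECONFIGURE WITH OVERRIDE
GO
```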